Provision in Spending Bill Could Protect Health Insurers From AI Accountability

The measure would shield health insurers’ use of AI-powered systems to deny care and coverage from state oversight for a decade.

Tucked into House Republicans’ federal budget reconciliation bill is a provision that hasn’t made many headlines, but it should. The measure calls for a sweeping 10-year freeze on any state or local regulation of artificial intelligence systems.

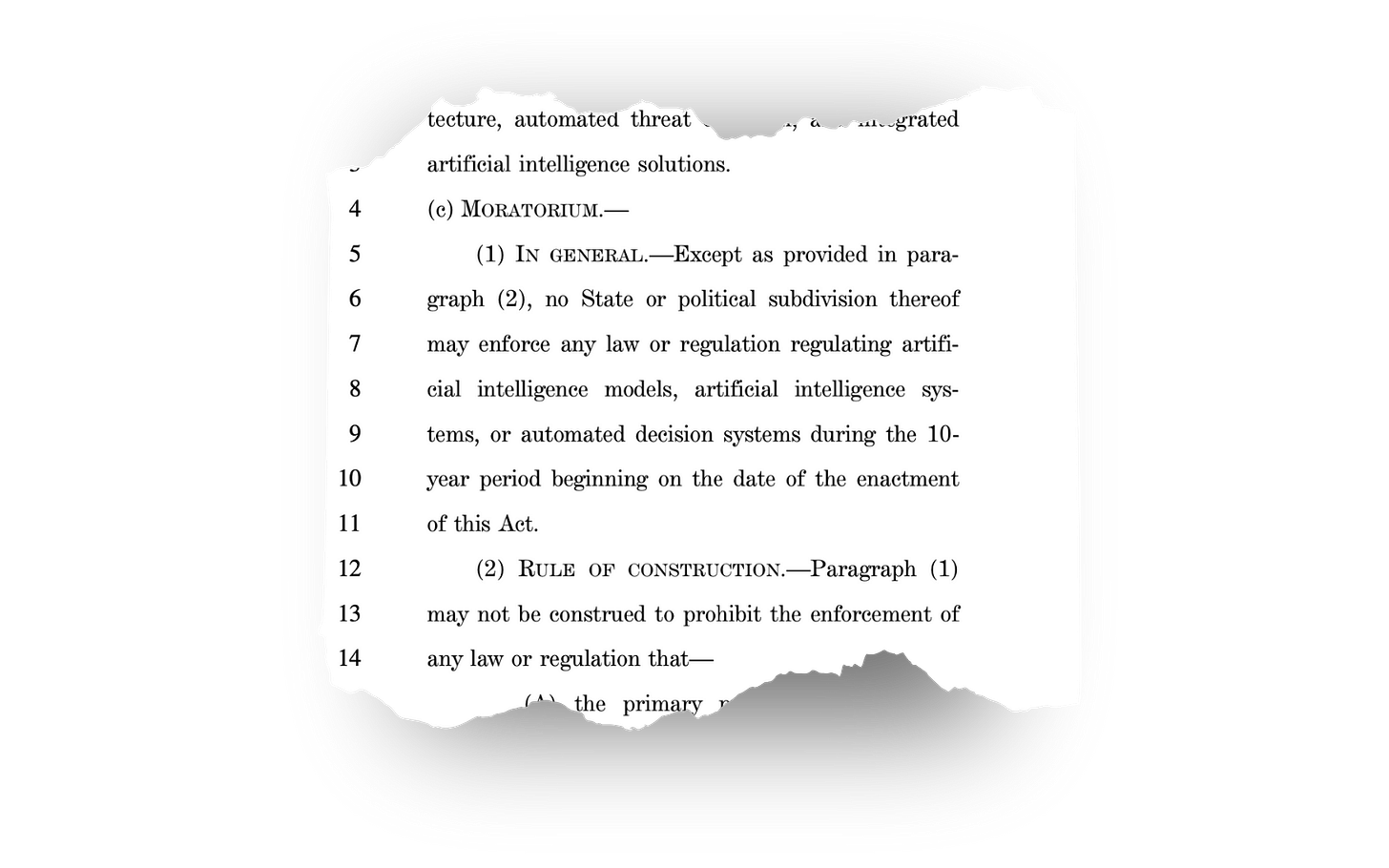

Introduced by Rep. Brett Guthrie (R-Kentucky), the added language states that “no State or political subdivision thereof may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems” for the next decade. It’s a proposal with potentially far-reaching consequences for consumers, especially when it comes to how insurers and health systems use technology to determine what care people do — or don’t — receive.

The rider is part of a much larger tax and spending package, which also includes significant cuts to Medicaid and changes to cost-sharing for low-income patients. But the AI language opens up a whole other can of worms.

The Health Care Connection

Big health insurers have increasingly embraced AI and algorithmic systems to automate decisions around coverage, billing and care approvals.

As highlighted by STAT News, Cigna used a computer algorithm to automatically deny claims in bulk, with medical directors reportedly spending only seconds “reviewing” each case.

It’s not just one insurer. UnitedHealth used an AI algorithm that reportedly has a 90% error rate in reviewing patients’ requests for care, leading to an untold number of patients being inappropriately denied medically necessary care.

In response to the inappropriate use of AI to deny care and claims, some states have enacted laws to hold health plans more accountable. For instance, California passed a law requiring disclosure when AI is used to communicate clinical information to patients; Colorado now requires companies to protect consumers from algorithmic discrimination; and New York City mandates bias audits of AI tools used in hiring. The problem with Congress’s spending bill is that it would make those state and local laws unenforceable for the next decade.

Critics Sound the Alarm

More than 100 organizations, from academic institutions to workers' rights groups, signed onto a letter sent to Congress earlier this week urging lawmakers to strike the provision. The letter warns:

“even if a company deliberately designs an algorithm that causes foreseeable harm—regardless of how intentional or egregious the misconduct—the company making or using that tech would be unaccountable to lawmakers and the public.”

It’s worth noting that this concern isn’t limited to watchdogs and digital rights activists. Even some of the very people building these systems are asking for oversight. OpenAI CEO Sam Altman testified before Congress that regulatory intervention is “critical to mitigate the risks” of advanced AI.

A Free Pass for AI in Health Care?

I doubt this provision was inserted with health care in mind (AI companies have increasingly been currying favor with the White House and Congress), but that’s beside the point. The implications are clear: if passed as written, it would remove a key layer of accountability just as AI systems are becoming central to health care decision-making.

For patients, it means fewer protections against health insurers outsourcing care decisions to a bunch of 1s and 0s and digital decision trees. And for big health insurers, it offers an invaluable gift: cover.

Lawmakers on both sides of the aisle have said they support “responsible AI” and commonsense protections. They should use this opportunity to reject the idea that powerful technologies should be immune from state oversight, especially when they’re making life-and-death decisions about Americans’ health.

P.S. Another AI/Health Care Provision Worth Noting

There’s another little-noticed provision in the same budget bill that also deserves attention: It directs the Department of Health and Human Services (HHS) to use artificial intelligence to root out “fraud” and recover “overpayments” in the traditional Medicare program.

David Lipschutz, with the Center for Medicare Advocacy, warned that improper payments are often due to minor paperwork errors — not actual fraud — and that leaving these nuanced decisions up to algorithms could lead to wrongful denials or clawbacks.

Lipschutz told Rolling Stone:

“What would be the opportunities or mechanisms to correct erroneous decisions from AI? Would beneficiaries be held harmless if an AI tool determines that services they already received were improper? Oftentimes, whether a given item or service is proper or medically necessary is a nuanced analysis that can include clinical judgment and application of complex coverage criteria, and should be an individualized assessment for a specific individual. It would be dangerous to leave this important analysis to an algorithm.”

So, while one part of the bill would shield AI-driven coverage denials from state scrutiny, another aims to ramp up the use of AI to police Americans and their doctors. All this while it has been widely reported that overpayments to private Medicare Advantage insurers ($140 billion annually, by one analysis) vastly eclipse any alleged fraud or overpayments in the traditional Medicare program.
